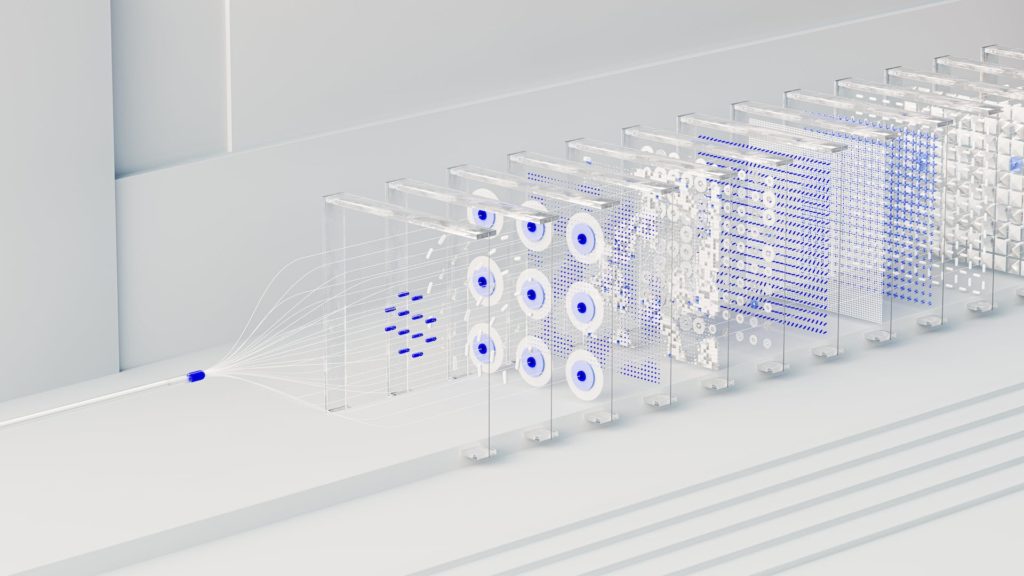

Distributed cloud refers to the distribution of cloud services to different physical locations while retaining a centralized management structure. Here are the key points to understand distributed cloud:

Geographical Distribution:

- Cloud services are distributed across multiple locations or regions.

- It involves deploying cloud resources across various data centers or edge locations.

Centralized Management:

- Despite being distributed, the management and control of these resources remain centralized.

- Central governance ensures consistent policies, security measures, and administration across all distributed locations.

Improved Latency and Performance:

- By placing resources closer to end-users or devices, it reduces latency and enhances performance for applications and services.

- Edge locations facilitate faster data processing and response times due to proximity.

Enhanced Reliability and Resilience:

- Redundancy across distributed locations improves reliability.

- If one region or data center faces an issue, services can be maintained or shifted to other available locations, ensuring continuous operations.
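The latency and failover points above can be sketched together as a small routing rule: send each request to the closest location that is currently healthy. This is a minimal illustration, not any provider's API; the `Region` type, the region names, and the latency figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Region:
    name: str          # hypothetical region identifier
    latency_ms: float  # assumed round-trip time measured from the client
    healthy: bool      # assumed result of a health check


def pick_region(regions: Iterable[Region]) -> Optional[Region]:
    """Return the healthy region with the lowest latency, or None if none is healthy."""
    candidates = [r for r in regions if r.healthy]
    return min(candidates, key=lambda r: r.latency_ms, default=None)


regions = [
    Region("edge-paris", 8.0, healthy=False),  # closest, but currently down
    Region("eu-west", 22.0, healthy=True),
    Region("us-east", 95.0, healthy=True),
]

chosen = pick_region(regions)
print(chosen.name if chosen else "no healthy region")
```

With the nearest edge location marked unhealthy, the request falls back to the next-closest region instead of failing. A real deployment would typically rely on the platform's own health checks and traffic management rather than client-side logic like this.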

Data Sovereignty and Compliance:

- Enables adherence to data sovereignty regulations by allowing data to be stored in specific geographic locations as required by regulations.
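One way to picture data residency enforcement is a central policy table that every location consults before storing a record. The sketch below assumes a simple mapping from a customer's country to the regions where their data may live; the country codes, region names, and the `can_store` helper are hypothetical and stand in for whatever policy engine a real platform provides.

```python
# Hypothetical residency policy: country code -> regions where data may be stored.
ALLOWED_REGIONS = {
    "DE": {"eu-central", "eu-west"},
    "US": {"us-east", "us-west"},
}


def can_store(customer_country: str, region: str) -> bool:
    """Return True only if the policy explicitly allows this country/region pair."""
    return region in ALLOWED_REGIONS.get(customer_country, set())


print(can_store("DE", "eu-central"))  # allowed by the table above
print(can_store("DE", "us-east"))     # not listed for DE, so denied
print(can_store("BR", "us-east"))     # unknown country, denied by default
```

Denying by default for unlisted countries is a deliberate choice in this sketch: a missing policy entry should block a write rather than silently permit it.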

Scalability and Flexibility:

- Offers scalability by allowing resources to be provisioned or scaled according to demand across different locations.

- Provides flexibility in resource allocation based on varying needs in different regions or for specific applications.

Edge Computing Integration:

- Integrates with edge computing technologies, enabling processing and analysis of data closer to where it’s generated (at the edge), reducing the need for data transmission to centralized servers.

Security Measures:

- Implements consistent security measures and protocols across all distributed locations to ensure data integrity and protect against cyber threats.

Cost Optimization:

- Optimizes costs by strategically distributing resources, potentially reducing data transfer costs and improving resource utilization based on regional demand.

Hybrid Cloud Capabilities:

- Integrates with hybrid cloud environments, allowing data and applications to move between on-premises infrastructure, private clouds, and distributed cloud resources.

Distributed cloud architecture offers a balance between centralized control and decentralized execution, providing advantages in terms of performance, reliability, compliance, and scalability.

Here’s an overview of Artificial Intelligence (AI):

Definition of AI:

- Artificial Intelligence refers to the simulation of human intelligence in machines programmed to think, learn, and perform tasks that typically require human intelligence.

Types of AI:

- Narrow/Weak AI: Designed for specific tasks; operates within a limited context. Examples include virtual assistants, image recognition systems, and chatbots.

- General AI (AGI): A still-theoretical form of AI that would possess human-like intelligence and abilities, capable of understanding, learning, reasoning, and problem-solving across diverse tasks.

- Superintelligent AI: Hypothetical AI surpassing human intelligence across all domains and capabilities.

AI Techniques and Subfields:

- Machine Learning (ML): Subset of AI enabling systems to learn and improve from experience without explicit programming.

- Deep Learning: Utilizes neural networks with multiple layers to learn representations of data, enabling complex pattern recognition and decision-making.

- Natural Language Processing (NLP): AI discipline enabling machines to understand, interpret, and generate human language.

- Computer Vision: Focuses on enabling machines to interpret and understand visual information from images or videos.

- Robotics: Integrates AI with mechanical systems to create intelligent robots capable of performing tasks in various environments.
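To make the Machine Learning bullet concrete, here is a minimal sketch of a program that is never told the rule behind its data and instead estimates it from examples. It fits a straight line with plain gradient descent; the data, the learning rate, and the `fit_line` helper are illustrative assumptions, and real projects would normally use an established library.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum((w * x + b - y) * x for x, y in zip(xs, ys)) * 2 / n
        grad_b = sum((w * x + b - y) for x, y in zip(xs, ys)) * 2 / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy examples generated from y = 2x + 1; the code never sees that formula.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

The learned values approach a slope of 2 and an intercept of 1, which is the sense in which the system "learns from experience": the relationship comes from the examples, not from hand-written rules.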

Applications of AI:

- Healthcare: Diagnosis, personalized treatment, drug discovery, and medical image analysis.

- Finance: Fraud detection, algorithmic trading, risk assessment, and customer service.

- Autonomous Vehicles: Self-driving cars and drones that use AI for navigation and decision-making.

- Retail: Recommendation systems, inventory management, and personalized shopping experiences.

- Cybersecurity: Threat detection, anomaly identification, and automated response systems.

- Entertainment: Gaming, content recommendation, and AI-generated media.

Ethical Considerations:

- Bias and Fairness: AI systems can inherit biases from training data, leading to unfair or discriminatory outcomes.

- Privacy Concerns: Collection and analysis of vast amounts of personal data raise privacy and surveillance concerns.

- Job Displacement: Automation through AI might lead to job displacement, necessitating re-skilling and job redefinition.
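The bias and fairness concern can be checked with simple measurements. The sketch below computes one common metric, the gap in positive-decision rates between groups (often called demographic parity difference). The groups, the decisions, and the helper names are hypothetical, and a single metric like this is only a starting point for a fairness review.

```python
def selection_rate(decisions):
    """Fraction of positive (1) decisions in a list of 0/1 outcomes."""
    return sum(decisions) / len(decisions)


def demographic_parity_gap(decisions_by_group):
    """Return (gap, rates): the spread between the highest and lowest group rates."""
    rates = {group: selection_rate(d) for group, d in decisions_by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical model outputs: 1 = approved, 0 = rejected.
decisions_by_group = {
    "group_a": [1, 1, 1, 0],
    "group_b": [1, 0, 0, 0],
}

gap, rates = demographic_parity_gap(decisions_by_group)
print(rates)
print(f"gap = {gap}")
```

A gap of zero means every group receives positive decisions at the same rate; a large gap is a signal to examine the training data and the model more closely.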

Challenges in AI Development:

- Data Quality and Quantity: Access to high-quality and diverse datasets is crucial for training robust AI models.

- Interpretability and Transparency: Complex models, especially deep neural networks, reach decisions that are difficult to trace and explain.

- Ethical and Legal Frameworks: Establishing ethical guidelines and regulations for responsible AI development and deployment.

Future Trends:

- AI and IoT Integration: AI-powered devices and sensors in interconnected systems.

- Explainable AI: Emphasizing the interpretability of AI models to understand decision-making processes.

- AI Democratization: Making AI tools and technologies accessible to a broader range of users and industries.

Artificial Intelligence continues to evolve, impacting various industries and aspects of daily life while posing challenges that necessitate ethical considerations and continuous technological advancements.

Here’s an overview of Sustainable Technology:

Definition of Sustainable Technology:

- Sustainable technology refers to the use of innovative methods, materials, and systems that aim to minimize environmental impact, conserve resources, and promote long-term ecological balance.

Key Principles:

- Environmental Impact Reduction: Technologies designed to lower carbon emissions, pollution, and resource consumption.

- Resource Conservation: Efficient use of energy, water, and materials to minimize waste and environmental degradation.

- Renewable Energy Sources: Emphasis on harnessing renewable sources like solar, wind, hydro, and geothermal energy.

Types of Sustainable Technologies:

- Renewable Energy Systems: Solar panels, wind turbines, hydroelectric power, geothermal systems, and biomass energy production.

- Energy Efficiency Solutions: Energy-efficient appliances, smart buildings, LED lighting, and energy management systems.

- Green Building Technologies: Sustainable construction materials, passive design strategies, and green roofs for energy conservation.

- Waste Management Innovations: Recycling technologies, waste-to-energy processes, and circular economy models to minimize waste.

- Water Conservation Technologies: Efficient irrigation systems, water recycling, and purification technologies.

Applications and Benefits:

- Climate Change Mitigation: Reducing greenhouse gas emissions and combating global warming by promoting clean energy adoption.

- Resource Conservation: Conserving natural resources like water, minerals, and fossil fuels through efficient technologies.

- Environmental Preservation: Minimizing pollution, protecting ecosystems, and preserving biodiversity.

- Economic Advantages: Cost savings through energy efficiency, reduced waste, and long-term sustainability.
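The economic argument is often summarized with a simple payback calculation: how many years of savings it takes to recover the upfront cost. The sketch below uses made-up figures for an LED lighting retrofit and ignores discounting, maintenance, and energy price changes, so treat it as an illustration of the arithmetic only.

```python
def simple_payback_years(upfront_cost, annual_savings):
    """Years needed for cumulative savings to equal the upfront cost."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return upfront_cost / annual_savings


def cumulative_net_savings(upfront_cost, annual_savings, years):
    """Total savings after a number of years, minus the upfront cost."""
    return annual_savings * years - upfront_cost


# Hypothetical figures, in any single currency.
led_retrofit_cost = 1200.0
yearly_energy_savings = 300.0

print(simple_payback_years(led_retrofit_cost, yearly_energy_savings))        # 4.0
print(cumulative_net_savings(led_retrofit_cost, yearly_energy_savings, 10))  # 1800.0
```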

Challenges and Considerations:

- Cost and Affordability: Initial investment and perceived higher costs might hinder the widespread adoption of sustainable technologies.

- Infrastructure and Implementation: Adapting existing infrastructure and deploying new technologies at scale.

- Policy and Regulation: The need for supportive policies and regulations to incentivize and promote sustainable technology adoption.

- Education and Awareness: Raising awareness and educating communities about the importance and benefits of sustainable practices.

Future Trends:

- Advancements in Renewable Energy: Innovations in solar, wind, and other renewable energy sources to improve efficiency and affordability.

- Smart Grid Technologies: Integration of IoT and AI for efficient energy distribution and management.

- Circular Economy Models: Emphasizing recycling, reusing, and remanufacturing to create closed-loop systems.

- Climate Tech Innovations: Technologies focused on carbon capture, climate adaptation, and sustainable agriculture.

Sustainable technology plays a pivotal role in addressing environmental challenges, promoting eco-friendly practices, and contributing to a more sustainable and resilient future for generations to come.

Here’s an overview of Quantum Computing:

Definition of Quantum Computing:

- Quantum computing is a field of computing that uses principles from quantum mechanics to perform computations. It operates on quantum bits, or qubits, which can be placed in superpositions of 0 and 1. Quantum algorithms exploit superposition, entanglement, and interference to solve certain problems faster than known classical methods.

Basic Principles:

- Qubits: Quantum bits are the fundamental units in quantum computing. Unlike a classical bit, a qubit can be in a superposition, a weighted combination of 0 and 1. Measuring it yields a single value, 0 or 1, with probabilities set by those weights.

- Quantum Entanglement: Qubits can be entangled, meaning their measurement outcomes are correlated more strongly than classical systems allow, even when the qubits are separated by vast distances. These correlations cannot be used to send information faster than light.
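Entanglement can be illustrated by simulating two qubits as a list of four amplitudes. The sketch below prepares the standard Bell state by applying a Hadamard gate to the first qubit and then a CNOT gate; the helper names are hypothetical, and this is a classical simulation for intuition, not code for real quantum hardware.

```python
import math

# State layout: amplitudes for |00>, |01>, |10>, |11> (first qubit is the left digit).


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot(state):
    """Flip the second qubit when the first is 1 (swaps the |10> and |11> amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


bell = cnot(hadamard_on_first([1.0, 0.0, 0.0, 0.0]))
probabilities = {
    label: round(abs(amp) ** 2, 3)
    for label, amp in zip(["00", "01", "10", "11"], bell)
}
print(probabilities)
```

Only the outcomes 00 and 11 have nonzero probability, each at one half. Neither qubit's result is predictable on its own, yet the two always agree, which is the correlation that entanglement describes.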

Key Concepts:

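- Superposition: A register of n qubits in superposition is described by amplitudes over all 2^n bit strings at once. A measurement returns only one of those strings, so the speedup comes from how an algorithm manipulates the amplitudes, not from reading out every possibility.

- Quantum Parallelism: A single operation acts on every component of a superposition at once. Combined with interference, this lets quantum computers solve certain problems, such as integer factoring with Shor’s algorithm, exponentially faster than the best known classical methods. For others, such as unstructured search with Grover’s algorithm, the speedup is quadratic.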

- Quantum Interference: Utilizing the interference patterns of qubits to arrive at the correct solution while canceling out incorrect possibilities.
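Superposition and interference can both be seen in a one-qubit simulation. In the sketch below, one Hadamard gate puts the qubit into an equal superposition, and a second one makes the two paths to the 1 outcome cancel so the qubit returns to 0. The `apply` and `probabilities` helpers are hypothetical names, and the whole thing is a classical simulation for intuition.

```python
import math

S = 1 / math.sqrt(2)
H = [[S, S],
     [S, -S]]  # Hadamard gate as a 2x2 matrix


def apply(gate, state):
    """Multiply a 2x2 gate by a 2-element state vector of amplitudes."""
    return [sum(gate[i][j] * state[j] for j in range(2)) for i in range(2)]


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]


zero = [1.0, 0.0]        # the |0> state
plus = apply(H, zero)    # equal superposition of 0 and 1
back = apply(H, plus)    # amplitudes for 1 cancel, amplitudes for 0 add up

print([round(p, 3) for p in probabilities(plus)])
print([round(p, 3) for p in probabilities(back)])
```

After the first gate both outcomes are equally likely; after the second, the probability of measuring 1 drops to zero. Quantum algorithms are designed so that this kind of cancellation removes wrong answers and reinforces correct ones.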

Types of Quantum Computing:

- Gate-Based Quantum Computing: Utilizes quantum gates to manipulate qubits, similar to classical logic gates in conventional computers.

- Quantum Annealing: Focuses on optimization problems by finding the lowest-energy state of a quantum system.

Applications and Potential Use Cases:

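- Cryptography and Security: Large quantum computers running Shor’s algorithm would break widely used public-key schemes such as RSA and elliptic-curve cryptography, which motivates the development of quantum-resistant (post-quantum) encryption algorithms. Quantum key distribution offers a complementary, physics-based approach to key exchange.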

- Drug Discovery: Simulating molecular interactions for drug development and material science, accelerating research processes.

- Optimization Problems: Solving complex optimization problems in various industries such as logistics, finance, and manufacturing.

- Machine Learning: Potentially speeding up some machine learning and pattern recognition tasks, although practical advantages over classical methods have yet to be demonstrated at scale.

Challenges and Limitations:

- Error Correction: Maintaining qubits’ fragile quantum states and minimizing errors caused by decoherence and noise.

- Scalability: Building larger and more stable quantum systems with increased qubit counts while reducing error rates.

- Hardware Development: Developing reliable and practical quantum hardware, including qubits and control systems.

Current State and Future Trends:

- Research and Development: Ongoing advancements in qubit technologies, error correction methods, and quantum algorithms.

- Commercialization: Transitioning from experimental prototypes to practical, commercially viable quantum computers.

- Hybrid Approaches: Integrating classical and quantum computing to solve problems more efficiently.

Quantum computing holds immense promise for revolutionizing various industries and solving complex problems that are intractable for classical computers, although significant technological and theoretical challenges remain before its widespread adoption.

Here’s an overview of Augmented Reality (AR):

Definition of Augmented Reality:

- Augmented Reality is a technology that overlays digital information, such as images, videos, or 3D models, onto the real world, enhancing the user’s perception by blending digital content with the physical environment.

Key Components of AR:

- Hardware: Devices used for AR experiences include smartphones, tablets, smart glasses, and headsets equipped with cameras, sensors, and displays.

- Software: AR applications and platforms create, manage, and deliver digital content overlaid onto the real world.

Types of Augmented Reality:

- Marker-based AR: Relies on specific markers or codes in the physical environment to trigger and display digital content when recognized by a camera-equipped device.

- Markerless AR: Does not require markers; uses GPS, accelerometers, gyroscopes, and computer vision to overlay digital content in the real world.

- Projection-based AR: Projects digital content directly onto physical surfaces, creating interactive and immersive experiences.

Applications and Use Cases:

- Entertainment and Gaming: Popularized by AR games like Pokémon GO, enabling interactive gaming experiences in real-world environments.

- Retail and Marketing: Allows customers to visualize products in their real environment before making purchases through AR apps.

- Education and Training: Enhances learning experiences by providing interactive and immersive educational content.

- Healthcare: AR assists in medical training, surgical simulations, and patient treatment by displaying medical information or aiding in diagnostics.

- Industrial and Manufacturing: AR guides workers with real-time information, instructions, and visual aids for complex tasks and maintenance.

Technological Components:

- Sensors: Cameras, GPS, accelerometers, gyroscopes, and depth sensors capture the real-world environment for AR applications.

- Computer Vision: Algorithms and technologies that enable devices to recognize and understand the surrounding environment.

- Display Systems: AR presents digital content through headsets, glasses, or smartphone screens, seamlessly blending virtual and real elements.
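At the core of overlaying digital content on a camera image is a projection step: given where a virtual object sits relative to the camera, compute which pixel it should be drawn at. The sketch below uses the standard pinhole camera model as an assumption; the intrinsics (`fx`, `fy`, `cx`, `cy`) and the sample points are hypothetical, and real AR frameworks handle tracking, lens distortion, and rendering on top of this.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point in camera coordinates to pixel coordinates (pinhole model).

    Returns None when the point is at or behind the camera plane.
    """
    x, y, z = point_cam
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical intrinsics for a 640x480 image: focal lengths and image center in pixels.
fx, fy, cx, cy = 800.0, 800.0, 320.0, 240.0

print(project_point((0.0, 0.0, 2.0), fx, fy, cx, cy))   # straight ahead: image center
print(project_point((0.5, 0.0, 2.0), fx, fy, cx, cy))   # half a meter to the right
print(project_point((0.0, 0.0, -1.0), fx, fy, cx, cy))  # behind the camera: not drawn
```

As the device moves, sensors and computer vision update the object's position relative to the camera, and repeating this projection each frame keeps the virtual content anchored to the same real-world spot.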

Challenges and Limitations:

- Hardware Limitations: Devices may have limited processing power, battery life, and field of view, impacting the quality and duration of AR experiences.

- Content Creation: Developing high-quality and contextually relevant AR content can be resource-intensive and complex.

- User Acceptance and Interaction: Ensuring intuitive and user-friendly interactions to enhance adoption and usability.

Future Trends:

- Advancements in Hardware: Improved AR devices with enhanced performance, battery life, and comfort for users.

- AR Cloud Development: Building shared AR experiences and persistent digital content that can be accessed by multiple users in the same physical space.

- Integration with AI and IoT: Leveraging artificial intelligence and Internet of Things to create smarter and more context-aware AR experiences.

Augmented Reality continues to evolve, offering a wide array of applications across industries and transforming how users interact with and perceive the world around them.